However, although risk levels are determined on a system basis, organisations must integrate AI management into their risk management, compliance, and governance frameworks. This ensures the AI system continues to achieve its goals and avoids falling into a different risk category.

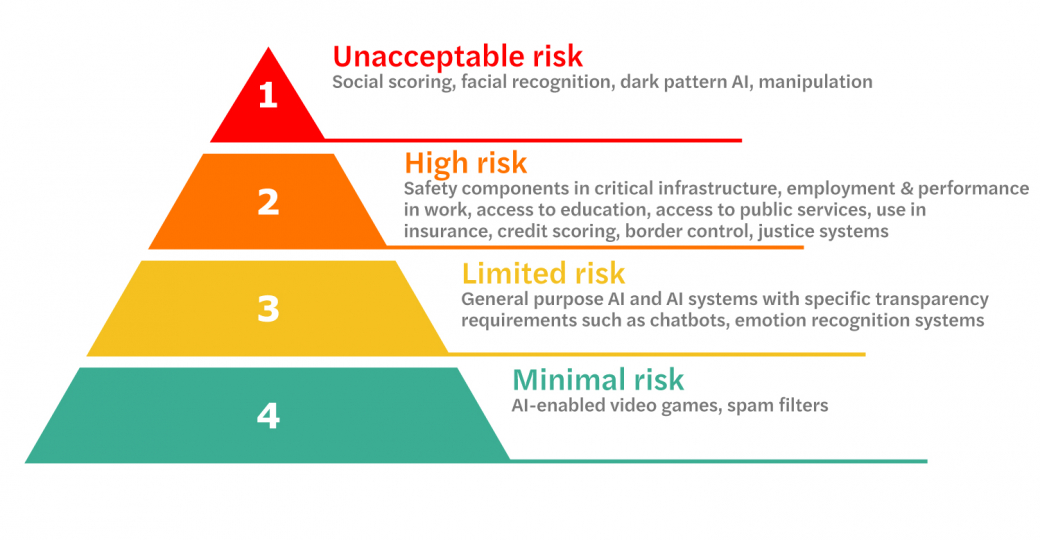

The EU AI Act recognises four different levels of risk associated with AI systems:

- Unacceptable risk

- High risk

- Limited risk

- Minimal or no risk

Depending on the circumstances and specific application, use, and level of technological development, artificial intelligence can pose risks and cause harm to public interest and fundamental rights.

1. Unacceptable risk

Unacceptable risk refers to AI systems that contradict the European Union's values of respect for human dignity, freedom, equality, democracy, and the rule of law, as well as Union fundamental rights. AI systems that fall under this category are therefore prohibited.

Examples of unacceptable risk AI systems

The following AI systems are prohibited under the AI Act:

- AI systems that manipulate or persuade persons to engage in unwanted behaviours or to make decisions they otherwise would not have made.

- AI systems that exploit vulnerabilities of a person or a specific group of persons due to their age, disability, or a specific social or economic situation.

- Biometric categorisation systems that use an individual's biometric data, e.g. their face or fingerprint, to deduce or infer that individual's characteristics, such as political opinions, religious beliefs, race, or sexual orientation.

- ‘Real-time’ remote biometric identification systems used in publicly accessible spaces for the purpose of law enforcement (some exceptions apply).

- Systems that evaluate or classify natural persons or groups over a certain period based on their social behaviour or personality characteristics, commonly known as social scoring.

- AI systems that detect the emotional state of individuals in situations related to the workplace and education.

Note that fines in this category are capped at 7% of global annual turnover or €35m, whichever is higher.
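As a rough illustration of how the two limits in this note combine, the sketch below computes the ceiling for a given turnover. It assumes the higher of the two amounts applies to prohibited practices. The function name `max_fine_eur` and the sample turnover figures are hypothetical and chosen only for illustration.

```python
# Ceiling on fines for prohibited (unacceptable-risk) AI practices.
# Assumption: the cap is the higher of EUR 35m and 7% of global annual turnover.
FIXED_CAP_EUR = 35_000_000
TURNOVER_PERCENT = 7


def max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Return the maximum possible fine for a given turnover (hypothetical helper)."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    turnover_based_cap = TURNOVER_PERCENT * global_annual_turnover_eur / 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    # Illustrative turnover figures only, not real companies.
    for turnover in (100_000_000, 1_000_000_000):
        print(f"turnover EUR {turnover:,} -> cap EUR {max_fine_eur(turnover):,.0f}")
```

Under this assumption, the fixed €35m limit is the binding one for any organisation with a turnover below €500m, and the percentage limit takes over above that.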

2. High risk

AI systems identified as high-risk should be limited to those that have a significant harmful impact on the health, safety, and fundamental rights of persons in the Union. These systems must comply with certain mandatory requirements to mitigate risks.

Providers and deployers of high-risk AI systems have a number of obligations, including risk management, human oversight, robustness, accuracy, cybersecurity, ensuring the quality and relevance of the data sets used, technical documentation and record-keeping, and transparency and the provision of information to deployers.

High-risk AI systems, as per Annex III of the Act:

- Biometrics, including remote biometric identification systems, biometric categorisation, and emotion recognition systems.

- Safety components in critical infrastructure, e.g. road traffic, water, gas, and electricity.

- Access to education and vocational training and evaluation of performance.

- Access to employment, recruitment and promotion and evaluation of employees in the workplace, where outputs may result in decisions that impact conditions of work.

- Essential private and public services, including credit scoring, risk assessing and pricing in health and life insurance.

- The remaining categories all relate to law enforcement, migration, and the judicial system.

It is also worth noting that the European Commission is responsible for making changes to Annex III and adding new systems to the list of high-risk AI. The criteria used to identify a high-risk AI system are flexible and can be adapted.

Annex I of the Act (Annex II in earlier drafts) lists Union harmonisation legislation under which AI systems may also be classified as high risk. All of this is product safety legislation, such as the Medical Devices Regulation.

3. Limited / transparency risk

Limited risk is concerned with the lack of transparency in the use of AI. Therefore, it is important that AI systems that directly interact with people, e.g. chatbots and deepfakes, are developed to ensure that the person is informed that they are interacting with an AI system.

Note that limited risk applies if one or more of the following criteria are fulfilled where the AI system is intended:

- To perform a narrow procedural task.

- To improve the result of a previously completed human activity.

- To detect decision-making patterns or deviations from prior decision-making patterns and is not meant to replace or influence the previously completed human assessment without proper human review.

- To perform a preparatory task to an assessment relevant for the use cases listed in Annex III.
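The "one or more of the following" test above can be sketched as a simple any-of check. This is an illustrative sketch only: the class `IntendedPurpose`, its field names, and the function `meets_listed_criteria` are hypothetical and are not terms defined in the Act. A real assessment needs legal review of the system's intended purpose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntendedPurpose:
    """Hypothetical record of what an AI system is intended to do."""
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    # Detects decision-making patterns without replacing or influencing
    # the earlier human assessment unless a human reviews the output.
    detects_patterns_with_human_review: bool = False
    preparatory_task_for_annex_iii_assessment: bool = False


def meets_listed_criteria(purpose: IntendedPurpose) -> bool:
    """Return True if at least one of the four listed criteria is fulfilled."""
    return any((
        purpose.narrow_procedural_task,
        purpose.improves_completed_human_activity,
        purpose.detects_patterns_with_human_review,
        purpose.preparatory_task_for_annex_iii_assessment,
    ))


if __name__ == "__main__":
    document_sorter = IntendedPurpose(narrow_procedural_task=True)
    print(meets_listed_criteria(document_sorter))
    print(meets_listed_criteria(IntendedPurpose()))
```

Recording each criterion as a separate field, rather than a single yes/no flag, keeps a trace of which criterion the classification relied on.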

General-purpose AI has also been added to the list of limited-risk AI systems and now carries a number of additional obligations under the Act. These systems will most likely be limited to the largest AI developers and in the short term at least will not be the focus of the majority of organisations.

4. Minimal or no risk

Minimal or no-risk AI systems are those that do not fall into the three categories above. As a result, no specific obligations apply to them, as the AI Act focuses mainly on AI systems that fall under the high-risk and limited-risk categories. AI-enabled video games and spam filters fall under this category. However, any AI system, through its use, development, training, expansion, etc., may change so that it falls into another risk category. Therefore, proper governance is essential regardless of the AI system used.

Conclusion

This risk-based approach means that organisations need to identify the AI systems they are using or plan to use and categorise the associated risk level. An AI inventory is a good starting point for this. Once the risk level is understood, an AI Act gap assessment is then required to identify how existing processes in the organisation can be leveraged to enable compliance with the AI Act, and a roadmap can be built. Public sector organisations also need to take into account guidelines from the Government on the responsible use of AI.
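One possible shape for such an AI inventory is sketched below. It records each system with its assigned risk level and orders the list so that the most serious categories are reviewed first. The names `RiskLevel`, `AISystemRecord`, and `prioritise`, along with the sample entries, are hypothetical. The risk levels shown follow the examples given in this article and are not a legal classification.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    """The four risk levels, ordered by severity."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


@dataclass
class AISystemRecord:
    """Hypothetical inventory entry for one AI system."""
    name: str
    purpose: str
    risk_level: RiskLevel


def prioritise(inventory: list[AISystemRecord]) -> list[AISystemRecord]:
    """Return a new list with the highest-risk systems first."""
    return sorted(inventory, key=lambda record: record.risk_level, reverse=True)


if __name__ == "__main__":
    # Illustrative entries only.
    inventory = [
        AISystemRecord("Spam filter", "Email filtering", RiskLevel.MINIMAL),
        AISystemRecord("CV screening tool", "Recruitment", RiskLevel.HIGH),
        AISystemRecord("Support chatbot", "Customer service", RiskLevel.LIMITED),
    ]
    for record in prioritise(inventory):
        print(f"{record.risk_level.name:<12} {record.name} ({record.purpose})")
```

Because a system's risk level can change as its use evolves, an inventory like this would need to be reviewed periodically rather than filled in once.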